Addressing AI's Power Consumption Challenges: Fujitsu's Three Energy-Saving Technologies

Article | 2025-07-23

The advancement of AI technologies, such as generative AI, offers transformative opportunities for businesses across various industries. However, the rapid growth in AI usage has resulted in higher power consumption in data centers, which negatively affects the environment. To address this challenge, Fujitsu is actively researching and developing a range of energy-saving technologies for AI infrastructure. This article presents three key technologies: (1) the "AI Computing Broker," which improves GPU efficiency in AI computations, (2) the next-generation CPU "FUJITSU-MONAKA," designed to balance power efficiency with high performance, and (3) liquid cooling technology that manages the rising heat levels in data centers caused by high-density computing for AI workloads.

*The affiliations and the content of this article are valid as of the date of its original publication.

Doubling GPU Utilization and Halving Power Consumption

Explanation from Kouta Nakashima, Head of the Computing Laboratory at Fujitsu Research, Fujitsu Limited.

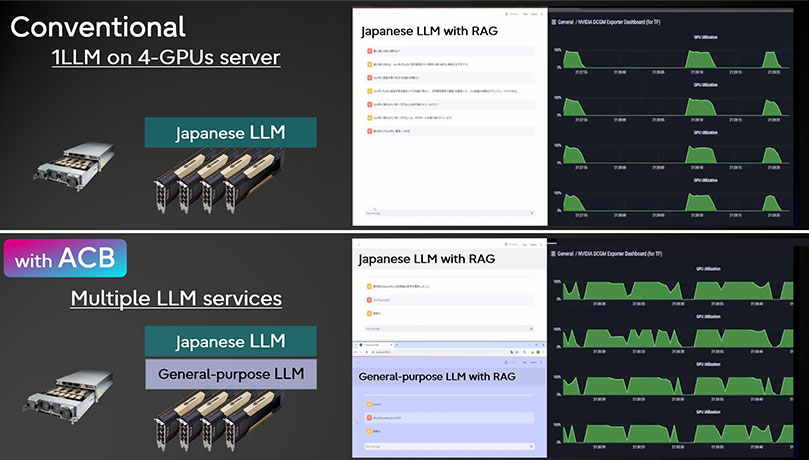

Fujitsu's AI Computing Broker is a software technology that doubles GPU utilization in AI computation. As AI continues to develop and proliferate, data centers are using an increasing number of GPUs. GPUs are in high demand worldwide, and supply is struggling to keep pace. By utilizing the AI Computing Broker, the number of GPUs needed for each computation can be reduced by half, helping to alleviate the GPU supply shortage. Moreover, power consumption is also cut in half. GPUs in AI data centers consume a substantial amount of power, so reducing the number of GPUs significantly contributes to overall energy savings.

AI programs use GPUs mainly for computation during the learning and inference phases. The pre-processing and post-processing tasks that run before and after learning and inference use CPUs, leaving the GPUs idle during that time. The AI Computing Broker eliminates this inefficiency by detaching GPUs from the original AI program during CPU processing and reallocating them to other AI programs for their learning and inference computation.

Unlike virtualization technology, which shares GPU memory among multiple programs, the AI Computing Broker (ACB) detaches the GPU along with its memory and reallocates it to other AI programs, effectively eliminating memory constraints. This technology is particularly beneficial in environments with multiple GPU servers, as it enables cross-server reallocation.
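The effect of lending an idle GPU to another program can be illustrated with a toy simulation. The sketch below is not Fujitsu's implementation; the `Job` class, the function names, the first-come-first-served policy, and the phase lengths are all assumptions made for illustration, and GPU memory handling is not modeled. It compares two jobs that each hold a dedicated GPU with the same two jobs sharing one GPU that is handed over only for GPU phases.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    cpu_time: int    # ticks of CPU-only pre/post-processing per iteration (>= 1)
    gpu_time: int    # ticks of GPU computation per iteration (>= 1)
    iterations: int  # number of CPU-then-GPU iterations


def dedicated_utilization(jobs):
    """Each job holds its own GPU for its whole lifetime."""
    busy = sum(j.gpu_time * j.iterations for j in jobs)
    held = sum((j.cpu_time + j.gpu_time) * j.iterations for j in jobs)
    return busy / held


def brokered_utilization(jobs):
    """One shared GPU, lent to a job only while it is in a GPU phase."""
    if any(j.cpu_time < 1 or j.gpu_time < 1 for j in jobs):
        raise ValueError("phase lengths must be at least one tick")
    by_name = {j.name: j for j in jobs}
    remaining = {j.name: j.iterations for j in jobs}
    phase = {j.name: "cpu" for j in jobs}
    left = {j.name: j.cpu_time for j in jobs}
    owner = None   # job currently holding the GPU
    waiting = []   # FIFO queue of jobs that need the GPU
    busy = 0       # ticks during which the GPU did work
    clock = 0
    while any(remaining.values()):
        if owner is None and waiting:
            owner = waiting.pop(0)
        for name, job in by_name.items():
            if remaining[name] == 0:
                continue
            if phase[name] == "cpu":
                left[name] -= 1
                if left[name] == 0:        # CPU phase done: request the GPU
                    phase[name] = "gpu"
                    left[name] = job.gpu_time
                    waiting.append(name)
            elif name == owner:
                left[name] -= 1
                busy += 1
                if left[name] == 0:        # GPU phase done: release the GPU
                    owner = None
                    remaining[name] -= 1
                    phase[name] = "cpu"
                    left[name] = job.cpu_time
        clock += 1
    return busy / clock


jobs = [Job("A", cpu_time=5, gpu_time=5, iterations=4),
        Job("B", cpu_time=5, gpu_time=5, iterations=4)]
print(f"two dedicated GPUs: {dedicated_utilization(jobs):.2f}")
print(f"one brokered GPU:   {brokered_utilization(jobs):.2f}")
```

With these assumed phase lengths, each dedicated GPU sits idle half the time, while the single shared GPU is busy for most of the run at the cost of a slightly longer total run time. Real workloads have uneven phase lengths, so the gain depends on how well the CPU and GPU phases of different programs interleave.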

The AI Computing Broker is a software solution that can be integrated into AI frameworks, allowing for a 50% reduction in GPU usage. It is compatible with both public cloud environments and on-premises setups, and it is already being used by companies in sectors such as finance, data center operations, and AI image analysis services.

High-Performance and Energy-Efficient Next-Generation CPU

Explanation from Toshio Yoshida, Executive Director of the Advanced Technology Development Unit at Fujitsu Research, Fujitsu Limited.

Since developing Japan's first supercomputer in 1977, Fujitsu has been at the forefront of developing world-class supercomputers and their CPUs. In the AI era, high-performance and energy-efficient computing is required not only for supercomputers but for all data centers.

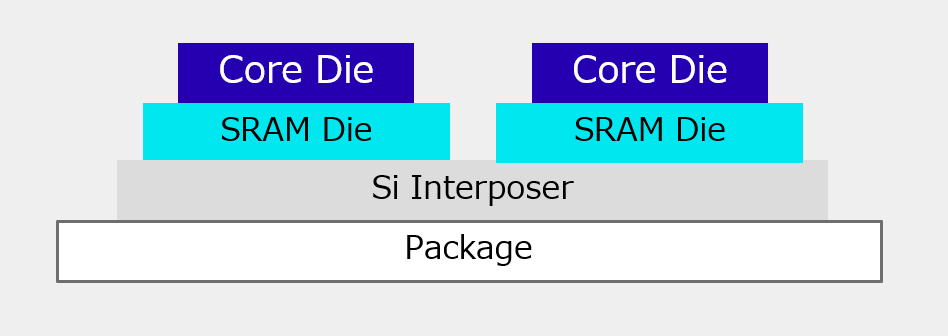

The FUJITSU-MONAKA (*1) is a next-generation CPU developed by Fujitsu that strikes a balance between high performance and energy efficiency. It is anticipated to be utilized across various sectors, including data centers, with shipments set to begin in 2027. To achieve this balance, Fujitsu is advancing its unique technologies, such as Fujitsu's own microarchitecture and low-voltage technology, which were developed through the creation of the K computer and the supercomputer Fugaku (*2).

Specifically, the FUJITSU-MONAKA features a "3D Many-Core" architecture, which employs a 3D stack structure for semiconductor circuits. This design minimizes data movement, resulting in low latency and high throughput. Additionally, the semiconductor manufacturing process incorporates unique innovations: the top die utilizes the latest 2-nanometer process for high energy efficiency, while the bottom die employs a cost-effective 5-nanometer process, achieving both energy savings and cost reductions through a hybrid configuration.

A key feature of the FUJITSU-MONAKA is its ability to operate stably at ultra-low voltage. Generally, reducing a CPU's power supply voltage can slow down circuit speeds and complicate stable operation; however, Fujitsu's innovative circuit design effectively addresses these challenges. By lowering the CPU's power supply voltage, power consumption is significantly reduced. These unique technologies allow the FUJITSU-MONAKA to achieve double the application performance (*3) and double the power efficiency (*3) compared to its competitors.
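Why a lower supply voltage saves so much power can be seen from the standard first-order model of CMOS dynamic power, in which power scales with the square of the voltage. The sketch below uses that textbook approximation; the function name and all numeric values are illustrative assumptions, not FUJITSU-MONAKA specifications, and leakage power is ignored.

```python
def dynamic_power_w(capacitance_f, voltage_v, frequency_hz, activity=1.0):
    """First-order CMOS dynamic power: P = activity * C * V^2 * f."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Illustrative values only (assumed, not taken from any real CPU).
baseline = dynamic_power_w(capacitance_f=1e-9, voltage_v=1.0, frequency_hz=2e9)
low_voltage = dynamic_power_w(capacitance_f=1e-9, voltage_v=0.7, frequency_hz=2e9)

ratio = low_voltage / baseline
print(f"power at 0.7 V relative to 1.0 V: {ratio:.2f}")
```

Under this model, a 30% voltage reduction at the same clock frequency roughly halves dynamic power. In practice a lower voltage also slows the circuits, which is the difficulty the paragraph above says the circuit design has to address.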

*1) FUJITSU-MONAKA: This is based on results obtained from a project subsidized by the New Energy and Industrial Technology Development Organization (NEDO).

*2) The K computer and the supercomputer Fugaku: jointly developed by RIKEN and Fujitsu.

*3) Based on our performance estimates for the 2027 release. Performance will vary depending on usage conditions, configuration, and other factors.

The Essential Trend of Liquid Cooling in the AI Era and Fujitsu's Expertise in Liquid Cooling Technology

Explanation from Nina Arataki and Hideki Maeda, Senior Directors of the Mission Critical System Business Unit at Fujitsu Limited.

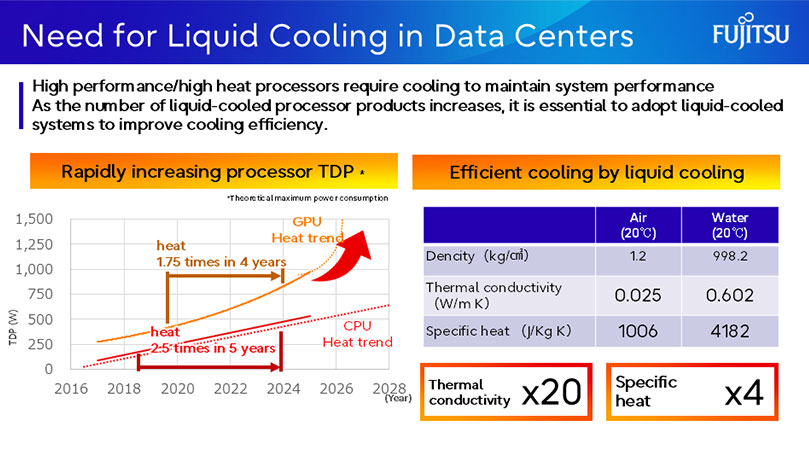

The rapid growth of AI and the resulting surge in computing demand, especially in GPU computation for AI processing, have increased both power demand in data centers and heat generation from servers. To prevent GPU failure, it is essential to maintain low temperatures, which necessitates additional power for cooling. The latest generation of GPUs released in 2025 requires liquid cooling. In this context, "liquid cooling," which offers higher cooling efficiency than traditional air cooling, is gaining attention as a technology to address increased heat generation in data centers and reduce cooling power consumption.

Liquid cooling uses liquid, which has more than 20 times the thermal conductivity of air, to effectively cool servers and racks. The main methods of liquid cooling are "Direct Liquid Cooling (DLC)," where a liquid cooling device (with circulating coolant) directly contacts the processor inside the server to dissipate heat, and "Rear Door Heat Exchanger (RDHX)," where a liquid cooling device is installed on the rear side of a rack with integrated servers to cool exhaust heat.

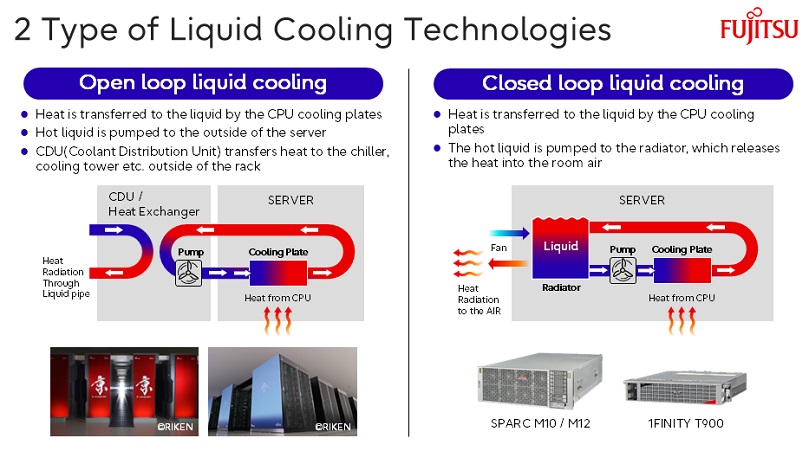

Direct liquid cooling can be implemented through two methods: "open-loop liquid cooling," which transports heated coolant outside the server, and "closed-loop liquid cooling," which moves heated liquid to a radiator within the server to release heat into the room air. Fujitsu possesses both technologies, but particularly in closed-loop liquid cooling, it has developed a world-first technology called "boiling cooling (two-phase cooling)," which cools by boiling the coolant and utilizing latent heat of vaporization. This technology was mass-produced and introduced for mission-critical applications in 2017, doubling the cooling performance compared to traditional closed-loop liquid cooling that uses only liquid (single-phase). It also reduces environmental impact compared to two-phase cooling with insulating refrigerants while ensuring human safety.
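The benefit of using latent heat of vaporization can be illustrated by comparing how much heat one kilogram of coolant absorbs when it is merely warmed versus when it boils. The sketch below assumes approximate textbook properties of water at atmospheric pressure and an assumed 10 K temperature rise; the article does not state the coolant or operating conditions of Fujitsu's system, so these numbers are for illustration only and the result is a per-kilogram comparison, not the system-level cooling performance quoted above.

```python
# Approximate properties of water at atmospheric pressure (assumed coolant).
SPECIFIC_HEAT_KJ_PER_KG_K = 4.18   # liquid water
LATENT_HEAT_KJ_PER_KG = 2257.0     # vaporization at about 100 degrees C


def sensible_heat_kj(mass_kg, delta_t_k):
    """Heat absorbed by warming liquid coolant without a phase change."""
    return mass_kg * SPECIFIC_HEAT_KJ_PER_KG_K * delta_t_k


def latent_heat_kj(mass_kg):
    """Heat absorbed by boiling the coolant at constant temperature."""
    return mass_kg * LATENT_HEAT_KJ_PER_KG


single_phase = sensible_heat_kj(mass_kg=1.0, delta_t_k=10.0)
two_phase = latent_heat_kj(mass_kg=1.0)
print(f"single-phase, 10 K rise: {single_phase:.1f} kJ/kg")
print(f"two-phase, boiling:      {two_phase:.1f} kJ/kg")
```

Because boiling absorbs far more heat per unit of coolant than a modest temperature rise, a two-phase loop can move the same heat with much less coolant flow. Overall system performance also depends on the heat exchanger, flow rates, and condenser, which this comparison does not capture.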

Open-loop liquid cooling transports high-temperature liquid from servers to cooling towers or chillers via a Coolant Distribution Unit (CDU). This method allows for high-density server configurations in racks by moving server heat outside the building using liquid, and many general-purpose GPU servers adopt this approach. Fujitsu incorporates its design philosophy of non-stop server systems, achieving hot swap redundancy for all units.

While IT infrastructure for liquid cooling exists, many companies hesitate to implement it due to concerns about potential liquid leaks damaging equipment, impacts on electrical systems below, optimal investment decisions for liquid cooling systems, and challenges related to maintenance and operational expertise.

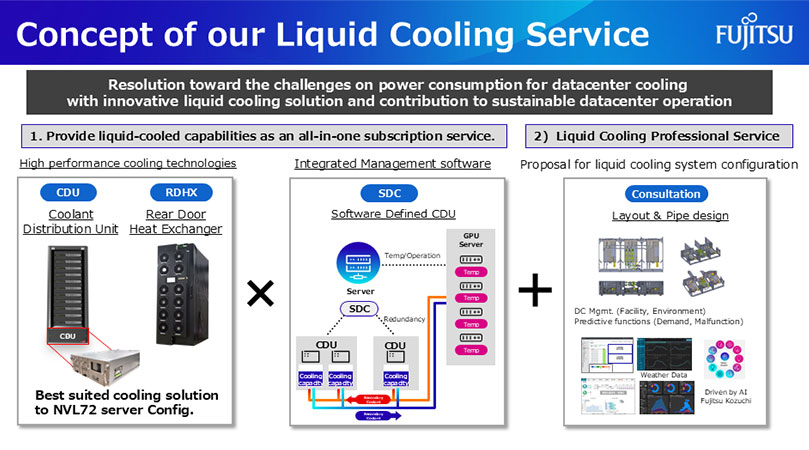

To address these concerns, Fujitsu leverages its liquid cooling technology and expertise to support the design and implementation of optimal cooling facilities (including cooling towers, chillers, and piping) tailored to customers' future server expansion plans as a professional service. Additionally, it offers an all-in-one subscription service that integrates and automatically controls liquid cooling hardware and multiple CDUs, alleviating initial investment and operational challenges. The "Fujitsu Liquid Cooling Management for Datacenter" service is scheduled for release in the first quarter of fiscal year 2025. This liquid cooling software monitors hardware and servers in real-time, controlling multiple CDUs to achieve high reliability, availability, and efficiency for the entire cooling system. These services are available not only to data center operators and companies with their own data centers but also to construction firms building data centers.

In collaboration with Super Micro Computer, Inc., a leading GPU server vendor, and Nidec Corporation, a rack-mounted CDU vendor, Fujitsu is testing the effectiveness of this solution at its Tatebayashi Data Center. The goal is to create a data center environment where customers can achieve world-class Power Usage Effectiveness (PUE)* by the fourth quarter of fiscal year 2025.

Fujitsu's collaboration aims to provide solutions that enable customers to achieve top-level PUE, contributing to energy savings and environmental sustainability in data centers.

* PUE, or Power Usage Effectiveness, is a metric that indicates the energy efficiency of a data center. It is calculated by dividing the total power consumption of the data center by the power consumption of IT equipment. The ideal value is 1.0, indicating that all power is used by IT equipment.
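As a worked example of the PUE definition above, the following sketch computes PUE from a facility's power breakdown. The function name and the kilowatt figures are assumptions chosen for illustration; they are not measurements from the Tatebayashi Data Center or any other site.

```python
def pue(it_kw, cooling_kw, other_kw=0.0):
    """PUE = total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if cooling_kw < 0 or other_kw < 0:
        raise ValueError("overhead power cannot be negative")
    return (it_kw + cooling_kw + other_kw) / it_kw


# Hypothetical facility: 1000 kW of IT load, 100 kW of other overhead.
before = pue(it_kw=1000.0, cooling_kw=400.0, other_kw=100.0)
after = pue(it_kw=1000.0, cooling_kw=150.0, other_kw=100.0)
print(f"PUE with 400 kW of cooling: {before}")
print(f"PUE with 150 kW of cooling: {after}")
```

In this hypothetical case, cutting cooling power from 400 kW to 150 kW moves PUE from 1.5 to 1.25, which shows why more efficient cooling is the main lever for approaching the ideal value of 1.0.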

- Press Release: Fujitsu and Supermicro announce strategic collaboration to develop green AI computing technology and liquid-cooled datacenter solutions

- Press Release: Fujitsu expands strategic collaboration with Supermicro to offer total generative AI platform

- Press Release: Fujitsu to offer Fujitsu Cloud Service Generative AI Platform for secure and flexible enterprise data management

Kouta Nakashima

Head of Computing Laboratory, Fujitsu Research, Fujitsu Limited

Joined Fujitsu Research in 2002. Since then, he has been engaged in research and development of High Performance Computing (HPC) technologies, including PC cluster acceleration and high-speed network control technologies. He has held his current position since 2023.

Toshio Yoshida

Executive Director, Advanced Technology Development Unit, Fujitsu Research, Fujitsu Limited

Joined Fujitsu Limited in 1999 and has consistently been involved in cutting-edge CPU development. As a specialist in processor architecture for HPC/AI/data centers, he has led the development of CPUs for UNIX servers and supercomputers like the K computer and Fugaku. He currently leads the development of the high-performance, energy-efficient processor FUJITSU-MONAKA.

Nina Arataki

Senior Director (Business Development and Alliance) of Sustainable Technology Div. Mission Critical System Business Unit, Fujitsu Limited

As a product engineer, she has been responsible for productization of proprietary technologies in hardware, software, and cloud development, filing 35 patents and leading industry groups. She has been involved in launching Fujitsu's AI business and overseas bases, as well as business development through alliances with other companies.

Hideki Maeda

Senior Director (Hardware) of Sustainable Technology Div. Mission Critical System Business Unit, Fujitsu Limited

Since joining the company, he has consistently been engaged in the development of cooling technologies for mission-critical servers and supercomputers. He has experience in productization of various cooling technologies, including air cooling, liquid cooling, and immersion cooling. He currently leads the energy-saving business for data centers, focusing on cooling technologies.
